Hello!

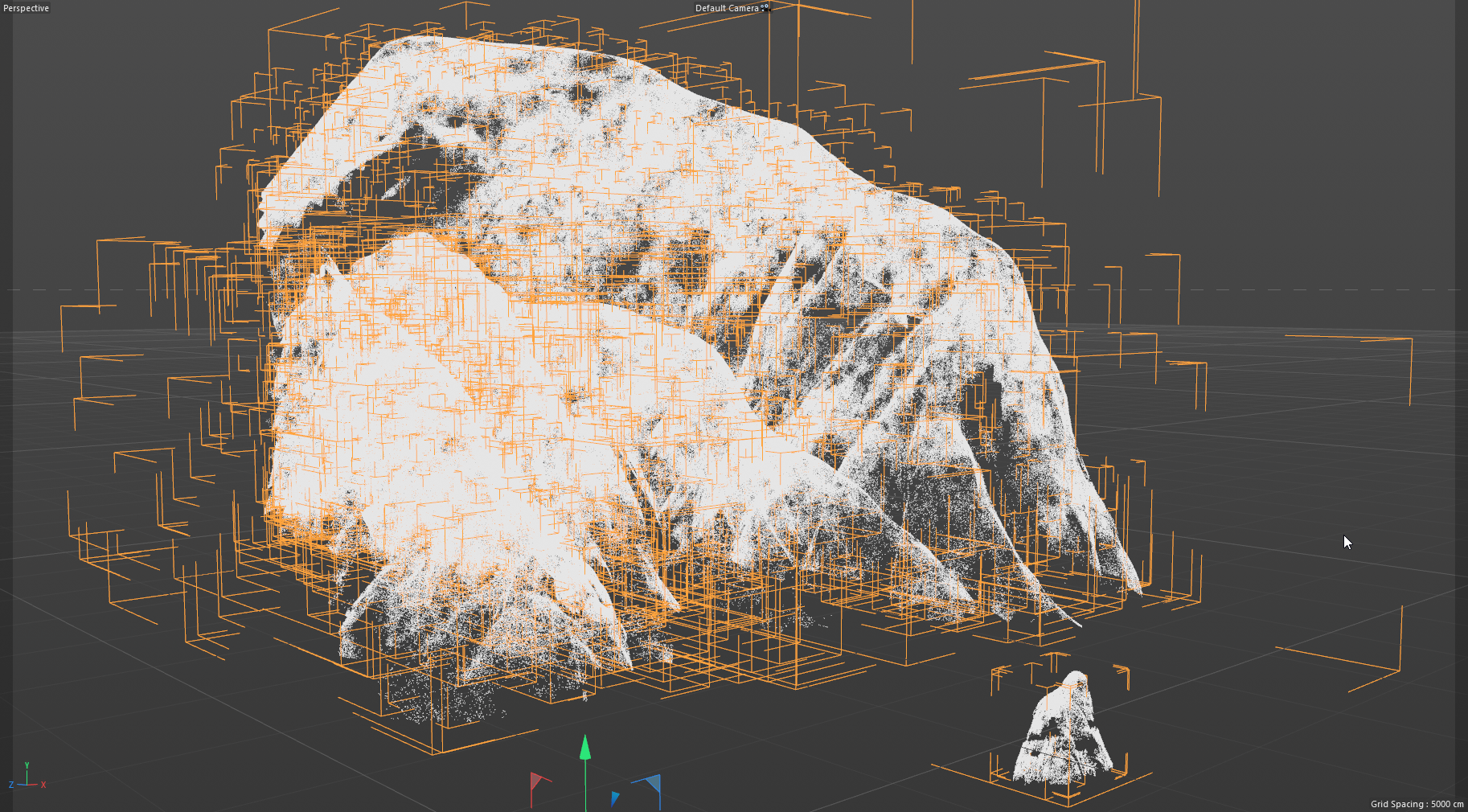

I'm revisiting the Moana Island data set and I'm making great progress; I've got almost all of the assets converted into Redshift Proxies.

The biggest problem I'm currently facing is a 3GB JSON file that defines renderable curves on the largest mountain asset. I don't know exactly how many curves are defined in this file, but based on the curve count and data size of other JSON files I think its roughly 5.2 million curves. Each point of the curve is an array with 3 items, each curve is an array of N points, and the curves are stored inside of a top level array.

The built-in json module must load the entire file into memory before operating on it. I've experienced extremely poor behavior on any JSON file over 500MB with the json module so I am instead parsing the files with ijson which allows for iterative reading of the JSON files as well as a much faster C backend based on YAJL.

Using ijson I was able to read an 11GB file that stored transform matrices for instanced assets on the beach. However, even using ijson I cannot seem to build a spline from the curves in this 3GB file (I gave up after letting the script run for 12 hours). I have a suspicion it has more to do with the way I'm building the spline object than parsing the data. So I have some questions. Is there a performance penalty for building a single spline with millions of segments? Should I instead build millions of splines with a single segment? Or would it be better to try and split the difference and build thousands of splines with 10,000 segments each?

I've done a little performance testing with my current code and right now it takes 10 minutes 13 seconds to build a single spline out of the first 100,000 curves in the file. However, if I build just the first 10,000 curves it only takes 5 seconds.

I'm leaning heavily toward chunking the splines into 10,000 segment batches but I want to first see if my code could be further optimized, here is the relevant portion:

curves = ijson.items(json_file, 'item', buf_size=32*1024)

#curves is a generator object that returns the points for each segment successively

for i, curve in enumerate(curves):

#for performance testing I'm limiting the number of segments parsed and created

if i > num:

break

point_count += len(curve) #tracking the total number of points

segment_count += 1 #tracking the number of segments

spline.ResizeObject(point_count, segment_count) #resizing the spline

for id, point in enumerate(reversed(curve)):

spline.SetSegment(segment_count-1, len(curve), False)

spline.SetPoint(point_count-id-1, flipMat * c4d.Vector(*(float(p) for p in point)))

spline.Message(c4d.MSG_UPDATE)